Our goal in this project is to remove the separation of main memory and non-volatile memory and bring them together in a distributed computing system under a unified interface so that different classes of storage media can be used flexibly in HPC workflows.

This goal is implemented through the following steps:

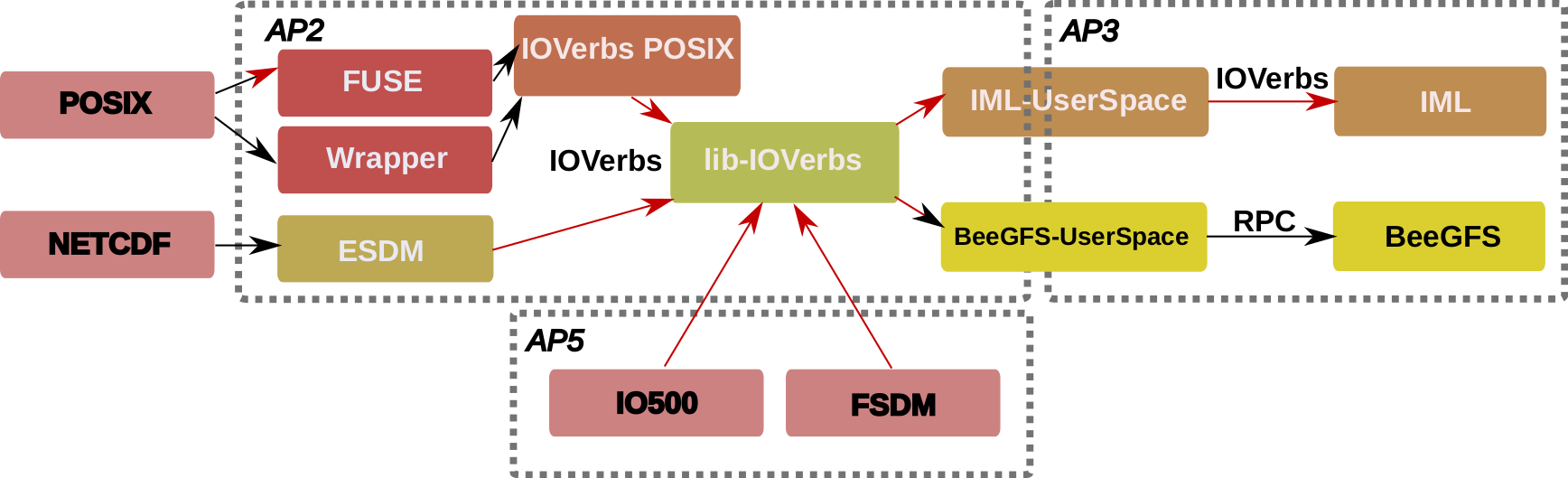

First, a low-level API, the IO verbs, will be created to express the elementary input/output (I/O) operations of parallel applications. Inspired by the InfiniBand verbs 1), the IO verbs should capture the I/O semantics of specific usage scenarios so that different memory systems can serve them efficiently. Examples include the consistency requirements of sequential and parallel I/O and their mapping to block- or byte-addressable memories. To this end, alternative interfaces for the elementary I/O of parallel applications will first be investigated systematically. The result is the definition and implementation of the IO verbs as a construction kit for elementary I/O operations, which can be used both directly and by domain-specific higher-level APIs to define and implement their I/O requirements. Analogous to POSIX, any application, and parallel applications in particular, should be able to address memory systems via the IO verbs interface. To improve acceptance, the I/O of existing applications will also be supported through a compatibility layer: the POSIX interface is mapped transparently to the IO verbs, and the corresponding consistency requirements are described with them. A direct interface for working with byte-addressable memory objects will also be part of the IO verbs.
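To make the idea of elementary verbs plus a POSIX compatibility layer more concrete, here is a minimal sketch. It is not the project's API: the names `Consistency`, `ByteStore`, `IOVerbs`, `PosixShim`, and the verbs `put`, `get`, and `commit` are all hypothetical, and the two consistency levels are only an assumed illustration of how a verb could carry a consistency requirement.

```python
from dataclasses import dataclass, field
from enum import Enum


class Consistency(Enum):
    """Hypothetical consistency levels, chosen only for illustration."""
    IMMEDIATE = "immediate"  # a write is visible as soon as put() returns
    ON_COMMIT = "on_commit"  # writes become visible only after commit()


@dataclass
class ByteStore:
    """Toy byte-addressable backend standing in for DRAM or NVRAM."""
    size: int
    data: bytearray = field(init=False)

    def __post_init__(self):
        self.data = bytearray(self.size)


class IOVerbs:
    """Illustrative verb set: put, get, commit (assumed names)."""

    def __init__(self, store, consistency=Consistency.IMMEDIATE):
        self.store = store
        self.consistency = consistency
        self._pending = []  # (offset, payload) writes that are not yet visible

    def _check(self, offset, length):
        if offset < 0 or offset + length > self.store.size:
            raise ValueError("access outside the store")

    def put(self, offset, payload):
        self._check(offset, len(payload))
        if self.consistency is Consistency.IMMEDIATE:
            self.store.data[offset:offset + len(payload)] = payload
        else:
            self._pending.append((offset, bytes(payload)))
        return len(payload)

    def get(self, offset, length):
        self._check(offset, length)
        return bytes(self.store.data[offset:offset + length])

    def commit(self):
        # Apply buffered writes in the order they were issued.
        for offset, payload in self._pending:
            self.store.data[offset:offset + len(payload)] = payload
        self._pending.clear()


class PosixShim:
    """Compatibility layer: maps pwrite/pread/fsync-style calls to verbs."""

    def __init__(self, verbs):
        self.verbs = verbs

    def pwrite(self, payload, offset):
        return self.verbs.put(offset, payload)

    def pread(self, length, offset):
        return self.verbs.get(offset, length)

    def fsync(self):
        self.verbs.commit()
```

In this sketch an existing application would keep calling `pwrite`, `pread`, and `fsync` on the shim, while a new application could call the verbs directly and choose the consistency level that fits its access pattern.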

Furthermore, based on the Infinite Memory Layer (IML) of Fraunhofer ITWM, an ecosystem for highly efficient, memory-centric I/O for application scenarios in campaigns 2) is being developed. IML is used in this project and extended with I/O interfaces, metadata and persistence. IML presents user applications with an abstract linear memory and hides the actual storage media (DRAM, NVRAM). This view enables applications to share data in a consistent, reliable, and fast manner. Further development of IML and its coupling with the IO verbs will result in a stand-alone product, the Memory-Centric Storage System (MCS2), which supports both volatile and non-volatile memory from different manufacturers and technologies. MCS2 additionally serves as the first demonstration of the relevance of the IO verbs for future high-performance storage solutions.
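The notion of an abstract linear memory that hides the underlying media can be illustrated with a small sketch. This is not IML code: `Segment` and `LinearMemory` are hypothetical names, and the media labels are plain strings. The sketch only shows how a single linear address range might be translated onto several backing segments.

```python
class Segment:
    """One backing region; `medium` is just a label such as "DRAM" or "NVRAM"."""

    def __init__(self, medium, size):
        self.medium = medium
        self.buf = bytearray(size)


class LinearMemory:
    """Presents several segments as one contiguous address space."""

    def __init__(self, segments):
        self.segments = list(segments)
        self.size = sum(len(s.buf) for s in self.segments)

    def _locate(self, addr):
        """Translate a linear address into (segment, offset inside segment)."""
        base = 0
        for seg in self.segments:
            if addr < base + len(seg.buf):
                return seg, addr - base
            base += len(seg.buf)
        raise IndexError("address outside linear memory")

    def _check(self, addr, length):
        if addr < 0 or addr + length > self.size:
            raise IndexError("access outside linear memory")

    def write(self, addr, payload):
        self._check(addr, len(payload))
        pos = 0
        while pos < len(payload):
            seg, off = self._locate(addr + pos)
            # Copy as much as fits into the current segment, then move on.
            n = min(len(payload) - pos, len(seg.buf) - off)
            seg.buf[off:off + n] = payload[pos:pos + n]
            pos += n

    def read(self, addr, length):
        self._check(addr, length)
        out = bytearray()
        while len(out) < length:
            seg, off = self._locate(addr + len(out))
            n = min(length - len(out), len(seg.buf) - off)
            out += seg.buf[off:off + n]
        return bytes(out)
```

A caller only sees addresses from `0` to `size - 1`; whether a given byte lands in the segment labelled "DRAM" or the one labelled "NVRAM" is decided inside `_locate`.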

For the integration of HPC workflows and campaigns, an execution model will be implemented that efficiently manages the data used for communication and keeps intermediate and final results persistent in memory-centric storage. In a campaign, multiple applications are launched in sequence and use compute and storage resources from a dedicated set of compute and storage nodes. Traditionally, the applications of a campaign communicate, or are coupled, via a data store, and previous solutions rely on classical parallel file systems. With MCS2, a user specifies the requirements of such a campaign, the parallel applications to be executed, and the necessary migration processes. For this purpose, the HPC API HPCSerA developed at GWDG is to be extended to include migration between IML and shared storage. The goal is that the necessary data migration between the different storage media, such as NVMe and the parallel file system, can be defined, managed and carried out via MCS2, so that manual data migration between user jobs is no longer necessary.
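The campaign model described above can be sketched as a small runner. Everything here is an assumption made for illustration: `Step`, `run_campaign`, and the `stage_in`/`stage_out` lists are hypothetical and do not reflect the HPCSerA or MCS2 interfaces, and plain dictionaries stand in for the parallel file system and the memory-centric store.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    """One application of a campaign (hypothetical description format)."""
    name: str
    run: Callable[[dict], dict]  # takes named inputs, returns named outputs
    inputs: tuple
    outputs: tuple


def run_campaign(steps, shared_storage, stage_in, stage_out):
    """Run steps in sequence, coupling them through a fast in-memory store.

    `shared_storage` stands in for the parallel file system. Only the keys
    listed in `stage_in` and `stage_out` are migrated between the two stores.
    """
    fast_store = {}
    log = []

    # Migrate the declared inputs from shared storage into the fast store.
    for key in stage_in:
        fast_store[key] = shared_storage[key]
        log.append(("stage_in", key))

    # Applications exchange intermediate results via the fast store only.
    for step in steps:
        produced = step.run({k: fast_store[k] for k in step.inputs})
        for key in step.outputs:
            fast_store[key] = produced[key]
        log.append(("run", step.name))

    # Migrate the declared final results back to shared storage.
    for key in stage_out:
        shared_storage[key] = fast_store[key]
        log.append(("stage_out", key))

    return log
```

The point of the sketch is that migration is declared once in the campaign description rather than performed by hand between jobs; intermediate data that is not listed in `stage_out` never touches the shared storage.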

The project is intended to significantly improve the I/O scalability of the parallel flow solver CODA so that a new class of problems can be run at scale.

The potential of the new approaches will be validated in the project. The synthetic benchmarks of IO500, a NetCDF benchmark, and the I/O-intensive parallel flow solver CODA serve as test scenarios, and different semantics of the IO verbs will be evaluated.

The architecture of the I/O layers that we aim for in the MCSE project is sketched here:

Project duration: 01.10.2022 - 30.09.2025

This project is funded by the German Federal Ministry of Education and Research (BMBF) under grant number 16ME0663K.